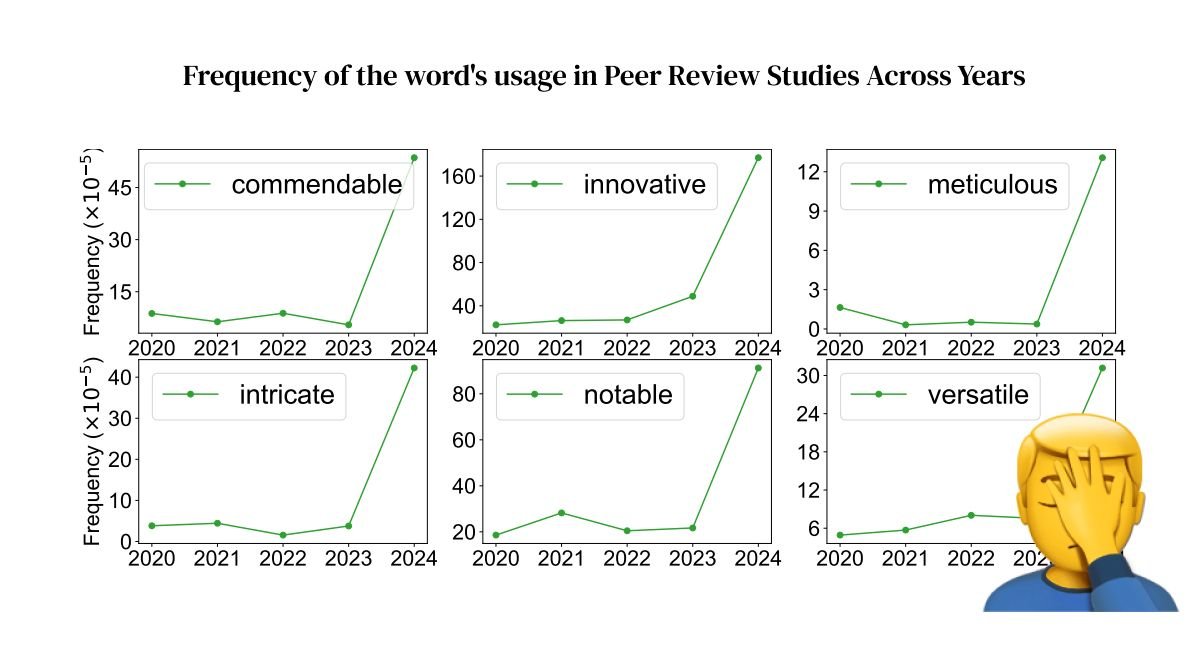

Ever wondered if ChatGPT knows when it's bullshitting you?

Thanks to new advances in Representation Engineering (RepE), it turns out researchers can tell, from a Gen AI model's internal activity, when it’s being less than honest. This breakthrough, from a team that includes researchers at Carnegie Mellon University and the University of California, Berkeley, means Gen AI is getting a kind of "lie detector" that reads its own digital brain.

Here’s how it works: instead of looking at every tiny piece of the Gen AI’s brain (which is like trying to understand a story by counting all the letters), the researchers look at bigger patterns and how those patterns behave while the AI is processing information. Doing this, they found that the model has its own internal representations of honesty and dishonesty. That lets them see when the AI's thinking is veering towards a lie.

For example, when a Gen AI model processes a statement, the researchers can observe its overall activity and pick out distinct patterns that signal truthful or deceptive responses. When the model is being honest, its internal activity lines up in a consistent, recognizable way, almost like a coherent narrative. When it's about to lie or hallucinate (a fancy way of saying "make stuff up"), that pattern shifts noticeably.
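For the technically curious, here is a toy sketch of the idea, loosely inspired by the paper's "reading vector" approach: run PCA over the differences between hidden states on paired honest and dishonest prompts, then score new states by projecting onto the top component. Everything below is an assumption for illustration. The activations are synthetic random vectors with a planted "honesty" direction, not states from a real LLM, and names like `fake_hidden_states` and `true_dir` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

DIM = 64       # hypothetical hidden-state size (real LLMs use thousands)
N_PAIRS = 200  # number of honest/dishonest stimulus pairs

# Hypothetical "honesty" direction planted inside the synthetic activations.
true_dir = rng.normal(size=DIM)
true_dir /= np.linalg.norm(true_dir)


def fake_hidden_states(honest: bool, n: int) -> np.ndarray:
    """Synthetic stand-in for hidden states; NOT real model activations."""
    shift = 2.0 if honest else -2.0
    return rng.normal(size=(n, DIM)) + shift * true_dir


# 1. Collect activations for paired honest / dishonest prompts.
honest = fake_hidden_states(True, N_PAIRS)
dishonest = fake_hidden_states(False, N_PAIRS)

# 2. Take paired differences, randomly flip their signs, center, and run PCA
#    via SVD. The top principal component serves as the "reading vector".
signs = rng.choice([-1.0, 1.0], size=(N_PAIRS, 1))
diffs = (honest - dishonest) * signs
diffs -= diffs.mean(axis=0)
_, _, vt = np.linalg.svd(diffs, full_matrices=False)
reading_vec = vt[0]

# PCA directions have arbitrary sign; orient so honest states score higher.
if (honest @ reading_vec).mean() < (dishonest @ reading_vec).mean():
    reading_vec = -reading_vec

# 3. Score unseen states: project onto the reading vector and threshold
#    at the midpoint between the two training-set means.
threshold = 0.5 * ((honest @ reading_vec).mean() + (dishonest @ reading_vec).mean())
test_honest = fake_hidden_states(True, 500)
test_dishonest = fake_hidden_states(False, 500)
correct = np.sum(test_honest @ reading_vec > threshold)
correct += np.sum(test_dishonest @ reading_vec <= threshold)
accuracy = correct / 1000

alignment = abs(reading_vec @ true_dir)
print(f"alignment with planted direction: {alignment:.2f}")
print(f"held-out accuracy: {accuracy:.2%}")
```

Using paired differences is meant to cancel out whatever the two prompts have in common, so the dominant leftover variation is the honest-versus-dishonest signal. On a real model you would collect hidden states from a chosen layer instead of using this toy generator, and the results would be far noisier.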

This is huge because it could help make Gen AI more trustworthy and safe. But here’s the twist: if AI knows it’s lying, is it just going to get better at hiding it?

The full study is here 👇

https://arxiv.org/pdf/2310.01405

#AI #GenerativeAI #RepE #AIsafety